*Update: As of Aug. 18, 2025, all 50 states have introduced AI-related legislation.

Based on an Inclusive Score post and official sources from the California Civil Rights Council.

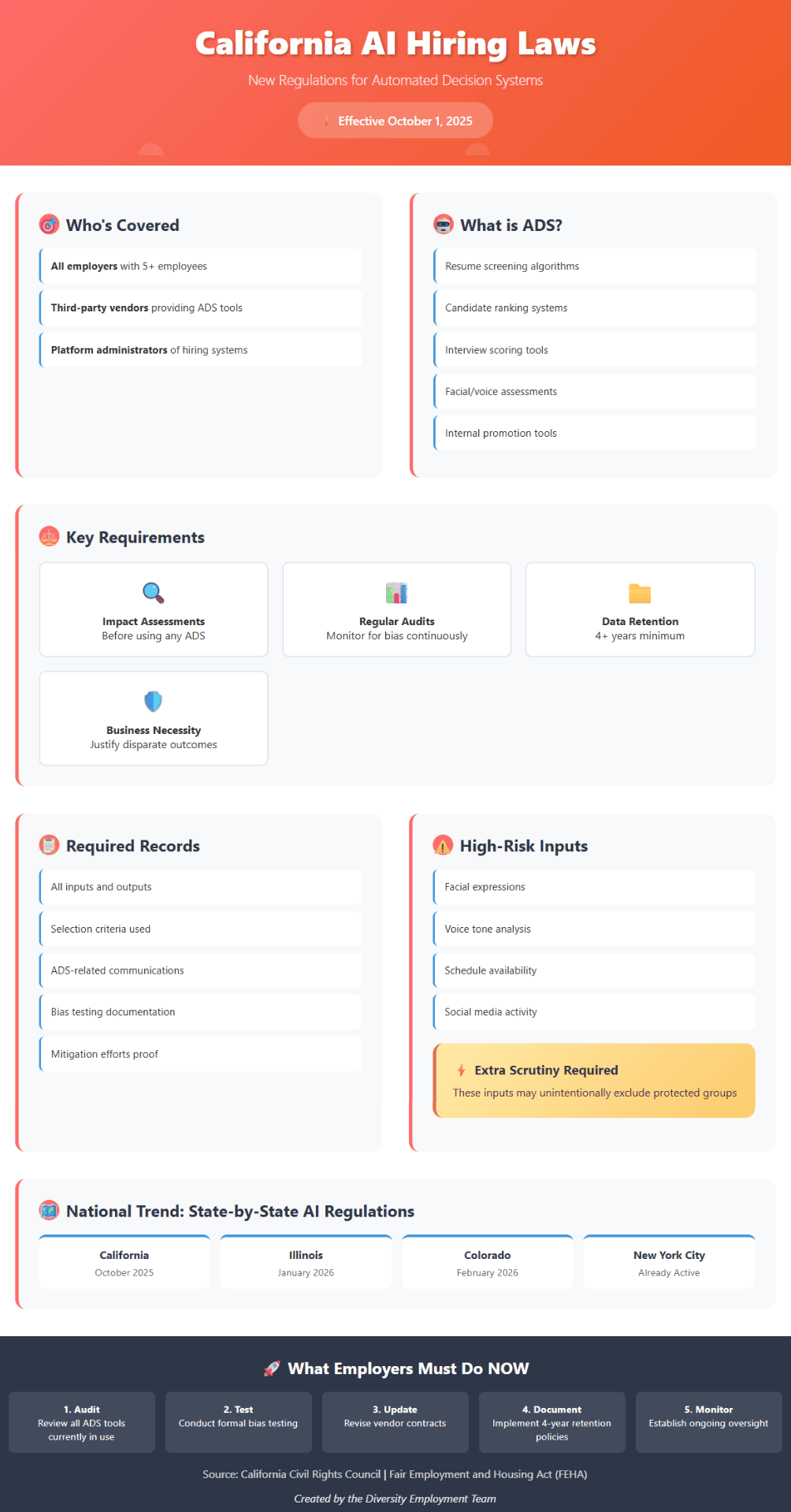

Starting October 1, 2025, California will formally bring AI-based hiring tools under anti-discrimination laws.

New regulations finalized by the California Civil Rights Council amend Title 2 of the California Code of Regulations, which implements the Fair Employment and Housing Act (FEHA), to cover automated decision systems (ADS): tools that use algorithms, AI, or predictive models to assist or make employment decisions.

These rules apply to:

- All employers with five or more employees

- Any third-party vendors or platforms providing or administering ADS tools

What Counts as an ADS?

According to the regulations, an ADS is any computational system that helps determine employment outcomes, including:

- Resume screening

- Candidate ranking

- Internal promotions

- Interview scoring

- Facial or voice-based assessments

FEHA protections now apply across a broad set of HR activities, including:

- Recruitment

- Evaluation

- Hiring

- Interviews

- Pay

- Leave

- Promotions

- Benefits

- Training

Employer Obligations Under FEHA

Anti-Discrimination & Business Necessity

ADS must not produce disparate outcomes for protected groups unless both of the following are true:

- The outcomes are justified by business necessity

- No less-discriminatory alternative exists

Employers are expected to:

- Conduct impact assessments before using an ADS

- Regularly audit tools to monitor for bias

Certain inputs like facial expressions, schedule availability, or voice tone may require extra scrutiny, as they could unintentionally exclude individuals based on religion, disability, or other protected traits.
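As a rough illustration of what a recurring bias audit might compute, the sketch below compares selection rates across groups and flags any group whose rate falls well below that of the highest-rate group. The 0.8 threshold is the federal "four-fifths" rule of thumb, used here only as an assumed screening heuristic; it is not a standard taken from the California regulations described in this post. The function names and sample data are hypothetical.

```python
from collections import Counter

def selection_rates(records):
    """Return {group: share of candidates selected}.

    `records` is an iterable of (group_label, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no ratio can be computed.
        return {group: None for group in rates}
    return {group: rate / best for group, rate in rates.items()}

def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio is below the assumed threshold."""
    return sorted(
        group for group, ratio in ratios.items()
        if ratio is not None and ratio < threshold
    )

# Hypothetical screening results: (group, advanced to interview?)
records = (
    [("group_a", True)] * 20 + [("group_a", False)] * 30
    + [("group_b", True)] * 10 + [("group_b", False)] * 30
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(flagged)  # ['group_b']
```

A flag from a check like this is a prompt for closer review and documentation, not a legal conclusion. Whether a disparity is justified is a question for counsel.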

Data Retention & Recordkeeping

Employers must maintain the following records for a minimum of four years after the ADS is used or the relevant personnel action is taken:

- Inputs and outputs

- Selection criteria

- ADS-related communications
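The four-year minimum can be turned into a simple date check inside a records system. The sketch below is one possible approach. It assumes the retention clock starts at the later of the last ADS use and the related personnel action, an interpretation that should be confirmed with counsel. The function names are hypothetical.

```python
from datetime import date

RETENTION_YEARS = 4  # minimum retention period described above

def add_years(start: date, years: int) -> date:
    """Return the same calendar day `years` later."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        # Feb 29 with no matching day in the target year:
        # roll forward to Mar 1 so records are never kept too briefly.
        return date(start.year + years, 3, 1)

def retention_deadline(last_ads_use: date, personnel_action: date,
                       years: int = RETENTION_YEARS) -> date:
    """Earliest date on which the retention period has fully elapsed.

    Assumption: the clock starts at the later of the two events.
    """
    return add_years(max(last_ads_use, personnel_action), years)

def may_purge(last_ads_use: date, personnel_action: date, today: date) -> bool:
    """True only once the full retention period has passed."""
    return today > retention_deadline(last_ads_use, personnel_action)

deadline = retention_deadline(date(2025, 10, 1), date(2025, 11, 15))
print(deadline)  # 2029-11-15
```

Treating the deadline day itself as still inside the retention window is a deliberately conservative choice. Litigation holds or other obligations may require keeping records longer.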

Vendor Contracts & Liability

Third-party tools do not shield employers from liability. Vendors are treated as agents, and employers are responsible for their compliance.

Contracts should clearly assign:

- Compliance duties

- Indemnification for risk

- Access to audit or documentation materials

Affirmative Defense

Employers may avoid liability if they can demonstrate:

- Pre-use bias testing

- Ongoing oversight

- Documented mitigation efforts

This “affirmative defense” gives employers a legal path to show good-faith efforts, though final responsibility remains with them.

Why It Matters

These regulations mark a massive shift in how hiring tech is treated under civil rights law. By applying the same anti-discrimination standards to both human and machine decision-makers, California is signaling that technology is not exempt from accountability.

For employers, this means:

- Accountability is shared: Whether a decision is made by a recruiter or by a resume screening algorithm, both must meet fairness and compliance benchmarks.

- Proactive testing isn’t optional: Documenting anti-bias efforts isn’t just smart policy; it can serve as a legal defense.

- Transparency is rising in value: Job seekers, regulators, and the public increasingly expect clarity around how decisions are made.

For job seekers and DEI advocates, it signals that tech cannot and will not be a shield for bias, even when that bias is unintentional. The systems we use to evaluate people need to be just as fair, inclusive, and explainable as the people who design, use, or deploy them.

The Broader Trend

California’s move to regulate AI-driven employment tools is part of a growing national shift toward governing algorithmic fairness in the workplace. States and cities across the U.S. are developing their own frameworks to address the risks of bias and discrimination in automated decision systems (ADS). Here’s what we’re seeing:

- California: ADS regulation under FEHA, effective October 2025

- Illinois: Amended Human Rights Act banning biased ADS effective January 2026

- Colorado: Comprehensive AI law sets risk and transparency standards effective February 2026

- New York City: Local Law 144 mandates bias audits and candidate notice policies

This patchwork of new rules suggests that AI compliance is becoming a standard feature of HR and legal strategy nationwide. While rules differ across jurisdictions, the message is clear: automated hiring is now regulated territory.

Given California’s size and legal influence, other states may soon follow.

What Employers Should Do Right Now

- Audit every ADS used in hiring or internal decision-making

- Conduct regular formal bias testing, and keep the evidence

- Update your vendor contracts to spell out compliance duties, indemnification, and audit access, keeping in mind that liability stays with the employer

- Implement data retention policies for all ADS use (minimum: four years)

- Ensure oversight at all decision points made by AI or algorithms

To Sum It Up

California’s updated FEHA rules represent a major turning point in employment law. Employers now need to apply the same scrutiny, oversight, and fairness to AI and algorithms as they would to any other hiring method.

The full regulatory text and details are available via the California Civil Rights Council.